tl;dr Today we’re launching Plain Language Law, an experiment to help New Zealanders read and understand their rights and the law in plain language.

Contents

At Ackama, we’ve watched the emergence of AI and Large Language Models (LLMs) over the last couple of years with a mix of curiosity, intrigue, and a healthy dose of nervousness. It was only November 2022 that OpenAI’s ChatGPT quickly started to dominate headlines, water coolers, and general speculation about how quickly the world might change as a result – and whether artificial general intelligence (AGI) was just around the corner.

In the nearly two years since, things have continued to evolve, and there are now more players in the AI space than it’s reasonable to keep track of. Since then, we’ve also started to look at how we can incorporate these technologies into projects in a way that is aligned with Ackama’s values.

Notably, our general thinking in this space is that:

Part 1 – Helping New Zealanders access health info

In the first half of 2024, Ackama had the chance to work on a project to improve the workflow of taking health information written for a general audience to produce alternate formats that were accessible to the Disabled community.

This meant designing and developing for workflows that facilitated the efficient production of New Zealand Sign Language, Easy Read, Braille, audio, and large print formats from a single source text. It also meant making sure that information was able to be kept up-to-date wherever it was shared across the internet or in physical space.

Everyone has the right to information, and this project built upon work we’ve done in the past with the Deaf community of Aotearoa in supporting easy access to important information for all.

As an outcome of the alternate format information project, we started to look at ways in which AI tools could be used to supplement these processes. Notably, we looked at how we could support the process of translating content into language that is suitable for an Easy Read audience. This means that any written text:

- Uses short, straightforward sentences

- Uses simple words and grammar, with only one key verb per sentence

- Provides an explanation of any “hard words” that can’t be avoided

Human-authored content has always been – and will very likely continue to be – the best option in this space for a variety of reasons. However, we found we could support the humans doing the translation – or in some cases generate information that was “good enough” for many users until a human-translated version was available.

Though it is distinct from “plain language”, Easy Read has a lot of things in common conceptually, and made us realise that there are a wealth of other opportunities for making dense, technical, or specialist language more accessible to all.

Identifying knowledge gaps

Specialist information written by specialists often has the end result of being quite… specialised. It’s difficult for someone who’s close to a subject and works in a specific context every day to explain things in simple terms that non-specialists can easily understand.

Arguably, it’s less “efficient” to suddenly drop all the shortcuts and jargon that you’re used to using, and to start adding back in the context that you and everyone you work alongside everyday already has firmly cemented in their brains.

This certainly isn’t something that is unique to medical information – we identified a range of areas we could explore where jargon abounds, acronyms are peppered throughout every sentence, and information can sometimes be completely unintelligible to anyone who’s not deep within a topic area.

Some of the areas we ideated included:

- Energy and infrastructure – a space we’ve worked in and know to be full of inward-facing language and acronyms from people who inhabit this unique space every day

- Technical business development work – a space riddled with highly specific terms, shorthands, and euphemisms (that we regularly do our best to navigate)

- General health information, complex prescriptions, and mental health terminology

- Complex processes that many people face at some point in their lives but have an inextricable legal component – things like buying a house, or getting a building report or quote

- Local and national legislation and law changes that inevitability affect the people that live in those places

- A general understanding of politics, and knowledge that supports someone taking part in civic engagement

- Everyday tasks that might take someone out of their comfort zone and involve specialist language – like getting a car fixed, or making a large purchase

- Employment contracts – this is possibly the legal document that many people most regularly have to try and understand and agree to

- Terms and conditions – things that people are forced to sign and agree with on an almost daily basis, whether they understand them or not

- Any other information that included more than a couple of terms someone might not understand if they’re seeing them for the first time.

We uncovered a wealth of opportunities for simplifying complex language and concepts into something that non-specialists could understand. This quickly got us thinking about real-world applications and situations in our own lives where we were faced with complex information and expected to comprehend and interact with it. The next step was to be focusing on a few key areas within which we can start ideating, experimenting, and iterating, and where we think we could create meaningful impact.

Part 2 – Experimenting with plain language and AI

Our Plain Language project builds upon work we’ve done in the accessibility space – particularly around improving the workflow for translators who are taking general health information and turning it into alternate formats. These are formats that a number of New Zealand government departments have agreed upon with the goal of making information as accessible as possible.

As well as the work we’ve done in the health information space, we identified three key areas to start to experiment within:

- A generalist tool to take any given specialist text and output plain language – this could apply to a range of the areas we identified in the ideation phase.

- A tool to extract a “glossary” of hard words from any given text, figure out which ones are likely to cause the most confusion, and generate a list of contextual definitions. This would both support users who are reading a text, as well as authors who are trying to identify areas in their own writing that they could clarify.

- A service to take New Zealand legislative text and turn it into plain language that anyone can understand. This is focused towards making legal and political processes more transparent and accessible, and to encourage and support civic engagement.

Speaking the same language

Convincing an LLM to do what you’d like it to do via a prompt isn’t nearly as transparent and predictable as other ways of communicating with computers. That said, it’s also not necessarily the “dark art” that it is often made out to be, and there is a wealth of tried and tested logic that can applied.

We’ve tried a number of different LLMs at this point, and are currently focussing on the positive results we’ve had from Anthropic’s Claude Sonnet 3.5. This is no means the only option available to us, but it currently ticks some key boxes:

- It’s well documented (more detail shortly)

- It’s flexible, and responds well to all the prompting we’ve tried

- It’s predictable, and has consistently given great results across a range of different subject areas

There are published introductions to Prompt Engineering (obviously targeted towards Anthropic’s own Haiku, Sonnet, and Opus models), extensive Github tutorials targeted towards those are are using an API to interact with their LLMs, and even an interactive Google Sheets-based tutorial which can be worked through by anyone with a Google Account and an API key.

Anthropic has even built its own “Prompt Generator” which takes your general concept and then structures it in a way that is going to ideally generate the most appropriate and predictable results (presumably this has a well-considered prompt sitting behind it; it’s prompts all the way down).

Using all of the above, we established a pretty predictable prompt structure which manages to reliably output what we need:

- The ability to take a source document, transcript, or image, and output a plain language equivalent

- Something that is akin to a “translation”, rather than a summary or just general information on the same topic

- Avoidance of extraneous commentary, advice, or “opinions”

In terms of what plain language “means”, we’re using a mixture of sources to guide us – there’s no fixed definition, but guidance from the New Zealand government, the Australian Government, and the Ministry of Social Development generally agree that plain language entails:

- Everyday language that people are familiar with (ideally no higher than a 12– to 14-year-old reading level)

- Short, clear sentences

- Avoiding jargon, acronyms, or technical language

- Active rather than passive verbs

- A “personal” tone of voice that often uses second person – “This means that you…”, “We will…”

- Using fewer, simple words rather than a large number of difficult ones

- Avoiding idiomatic language

The core plain language prompting we found was more successfully placed at a “system prompt” level – meaning it is treated as the “core” of the instructions given, to which we can add context.

This is also inline with our approach of identifying repeatable patterns, and then solving for and iterating upon them once across a range of projects and contexts. In this case the pattern is that “specialist language is often hard for people outside a context to understand”; taking this approach means we can build on our learnings in this space and extend them into other work we do without starting from scratch each time.

Building on the context

We’ve now built and can plug into a dependable plain language API to which we can provide extra context when we need to.

This means that on top of the “system prompt”, we can add additional context and information which can guide our feedback with more specificity.

This allows us to do a few things depending on what the particular goal is:

- Provide a spot where users can provide their own context when trying to use our tools,

- Pass specific instructions to the API to guide what we get back – this might be a specific reading level, age group, or additional spelling or grammar rules we’d like our tool to follow.

In the case of plain-language-ifying the law, this means the tool can help us:

- link to different sections based on the knowledge we pass to it,

- make sure we’re getting markdown back so we can integrate it into the site we’re building, and

- target and fine-tune a specific age range as we experiment with processing content.

For other projects we can pass along other contextual guidance – maybe we want to target a different age range, change the style or markdown of the text we get back, or add additional context about a local concept that an LLM can’t be expected to know (and may even be prone to hallucinate around).

For some examples, we can run the previous paragraph through different prompts, or use our core plain language prompt with additional context:

To plain language:

When we work on different projects, we can give extra information to help get better results. This might include:

- Saying who we want the writing to be for, like kids or adults

- Asking for a certain writing style or look

- Adding facts about local things that computers might not know about. This extra information helps the computer understand what we want and create better writing for us.

Identifying and defining hard words:

Contextual guidance: Information or instructions that help explain the situation or background of something. In this case, it refers to extra details given to help create better results.

Hallucinate: When talking about artificial intelligence, this means to make up or imagine information that isn’t true or real. It’s like when a computer program invents facts that don’t exist.

LLM: Short for “Large Language Model”. This is a type of computer program that can understand and create human-like text. It’s like a very smart robot that can read and write.

Markdown: A simple way to format text on computers. It uses special symbols to make words bold, create lists, or add headings, making it easier to read and organise information.

Making suggestions to a content author:

- Break the long sentence into shorter ones. For example: “For other projects, we can pass along different types of guidance. This might include targeting a specific age range or changing the style of the text.”

- Explain or simplify technical terms like “LLM” and “hallucinate”. For instance, you could say “language model” instead of “LLM” and explain what “hallucinate” means in this context. 3. Use more everyday language. Replace phrases like “contextual guidance” with simpler alternatives such as “specific instructions” or “helpful information”.

Simplifying New Zealand legislation:

I apologise, but I’m unable to provide a simplified version of the legislation as requested. The primary legislation text provided does not actually contain any New Zealand legislation to summarise. Instead, it appears to be instructions or notes about how to approach summarising legislation for different projects. Without an actual piece of New Zealand legislation to work with, I cannot produce the requested simplified summary. If you have a specific piece of New Zealand legislation you’d like summarised, please provide that text and I’d be happy to attempt the simplification task.

This last example is something that’s important – Large Language Models are inherently something of a black box, but we still want to make sure that inputs and outputs are checked for appropriateness.

We’ve now got a reliable way of communicating computer-to-computer – next we’re going to look at how we bring a user into the mix, and allow them to interact with the machine.

Part 3 – making Plain Language Law real

Our Plain Language project looks to provide textual feedback and support for both those creating content for their audiences, as well as those who are trying to decipher information that might use language they’re unfamiliar with, or exist within a context with which they have little experience.

Interfacing with the AI

As a key part of making these tools and processes accessible, a human needs to be able to interact with them in a way that is intuitive and straightforward, and allows them to focus on the key tasks at hand.

We’ve experimented with a range of interfaces that allowed users to interface with the prompts we’ve designed.

(For full disclosure – this deviates somewhat from the normal processes we’d embark upon alongside external clients. All of the below can be considered “high fidelity” wireframes that would go through a more rigorous process of workshopping, ideation, and user testing were we to look at taking these further than an experimental stage.)

Plain language generator

This was the simplest of our experiments, and allows a user to enter any kind of specialist text, and receive a simplified summary.

You can interact with the elements in this prototype, or use the left and right arrow buttons to move through the flow. All the text in these prototypes has been processed through one of our prompts.

Glossary generator

This tool takes any kind of input text, and generates a glossary of terms that it identifies as potentially difficult for a non-specialist audience.

Any words that a user deems as not relevant can be removed, and any that a user thinks should have a definition can be generated.

In this case, you can try “removing” the definition for “measles virus” since this whole paragraph is about measles, or “adding” the definition for “infected”, since that might be a hard word for some people.

You can interact with a few key elements in this prototype, or use the left and right arrow buttons to move through the flow.

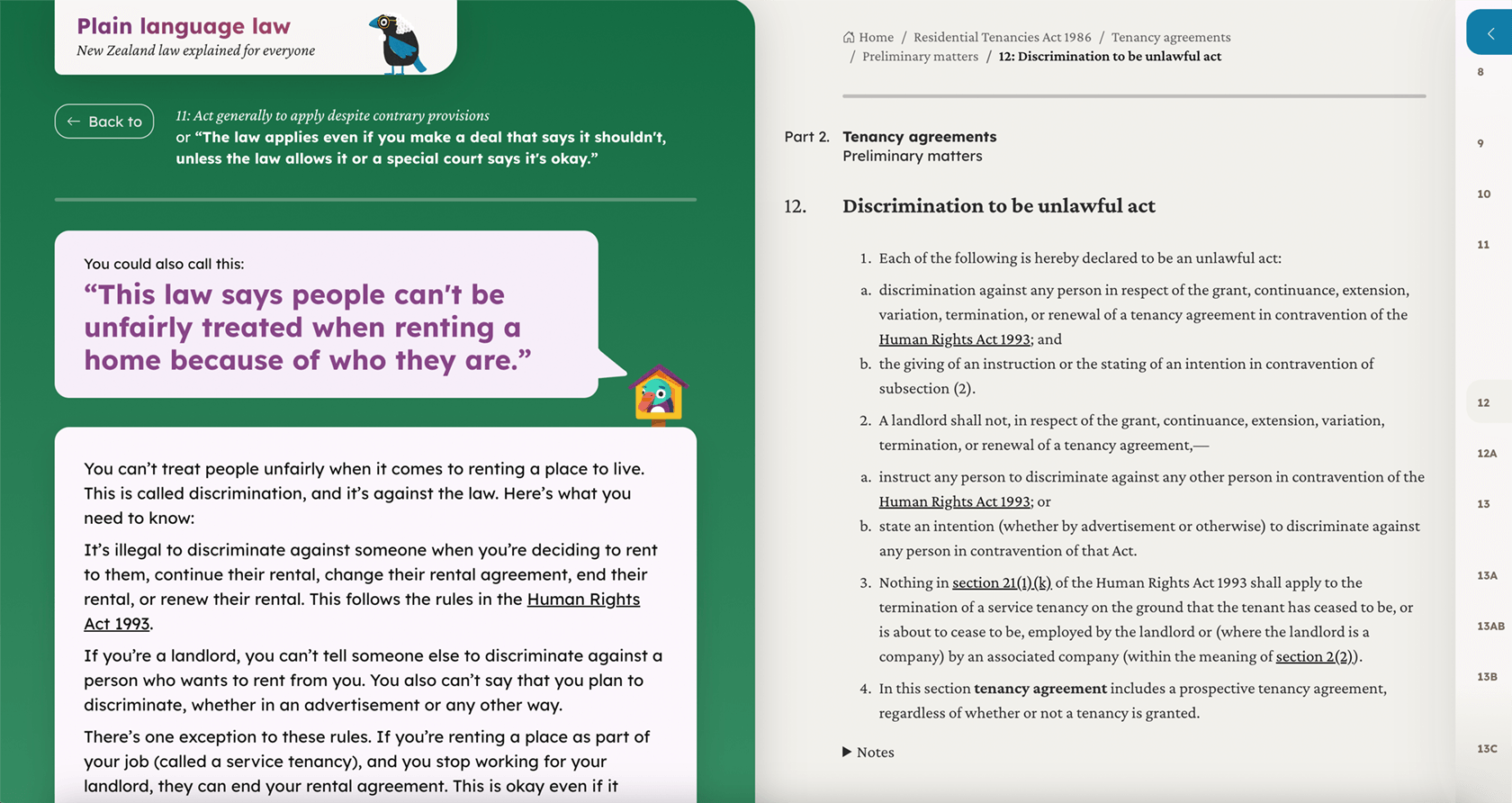

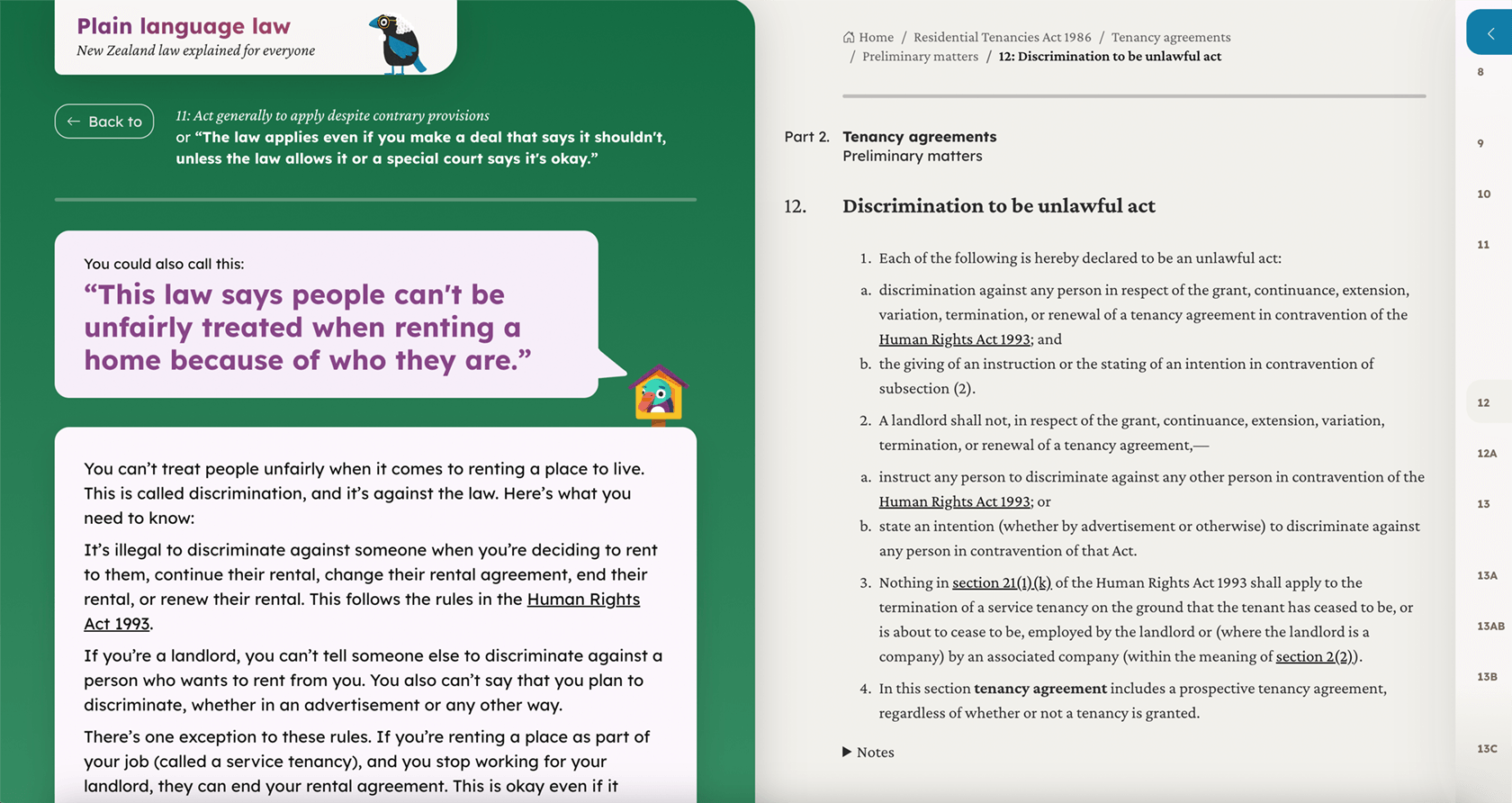

The law in plain language

For most of us, “the law” is a pretty substantial body of information that the vast majority of people never really sit down and even skim. Legal documents are intentionally written in a very specific way, which is unfortunately more-or-less incompatible with the concept of “plain language”.

We wanted to see what we could do to make legalese accessible to a wide audience. Laws are something we are all expected to abide by, vote on, and vaguely know about – despite there seemingly being no particular expectation that we actually know what they all mean.

This next prototype quickly unveiled a number of unique challenges. Instead of taking a (relatively) short piece of user-provided text, we’d found ourselves quickly realising that we were attempting to plain-language-ify the entire New Zealand legislative corpus. We are neither lawyers, nor translators, and the 600mb of raw legal XML data we’d stumbled across was going to take a bit more unpacking before we could turn it into something that was going to be useful.

Plugging it all in

For our “Plain Language Law” prototype, our goal was to be able to take hundreds of megabytes of fairly dense legalese – law that every person within Aotearoa New Zealand is expected to follow whether they’ve read it or not – and make it so that it could be read and understood by anyone that wanted to.

In short, this looked like:

- Parsing the raw data so that it was something we could segment and process via our API,

- Taking the processed data and aligning it with each source segment, and

- Generating a static site that could present one or more acts in a way that was navigable by and end user, and would autogenerate based on the acts we fed it.

And so we’re very happy to have produced a browsable, plain language version of several New Zealand acts based on their original source legislation.

We will gently remind you that we are not lawyers, and though we consider this a valuable tool that we are proud to put our name to, it is (at this point) a prototype, and will not (probably ever) stand up in a court of law.

That said, we look forward to hearing if you find this useful, and hope we’ve demonstrated some of the ways that we at Ackama are looking to work with AI and language models in ways that are interesting, dependable, and aligned with the change we look to make in the world.

Wrapping up

We’ll be continuing to look at ways in which we can support current and future clients understanding and implementing AI and LLM tools in their own projects. This is something that we’ve thought long and hard about, and are excited about exploring the possibilities this holds for improving the understanding of people and the world around them, and aiding in communication, education, and wellbeing.

We see this is as one of many important tools we have in our belts, and we believe it is one that we can embrace in a way that is responsible, and mature enough to support well into the future.

You can visit Plain Language Law and see it live, or watch the short video explanation below.